The content is published under Creative Commons Attribution 4.0 International license.

Reviewed Article:

A Workflow Tool for Archaeological Experiments and Analytics

This paper outlines the background to, and design of, a workflow tool intended to help plan and record archaeological experiments. The tool consists of a user-friendly interface for annotations and drawing flowcharts, and is being developed to work on smartphones, tablets and desktop computers. It is being written as part of a larger project aimed at creating a digital infrastructure that should make it easier for everyone involved in archaeology to share digital resources. Our workflow tool addresses the need to make the results of experimental archaeology more accessible to the wider archaeological community, and to make them more reproducible. Through better planning, it should also help reduce the risk of making mistakes and wasting time and/or resources when running experiments.

Introduction

A few years ago, while working at what was then the RGZM and is now LEIZA, we were asked to write a proposal for a project relating to experimental archaeology as part of the NFDI4Objects consortium (Bibby, et al., 2023; Thiery, et al., 2023); NFDI stands for the German "Nationale Forschungsdateninfrastruktur" (https://www.nfdi.de/). This project provided us with an opportunity to address the belief that a "lack of sufficient technical support and infrastructure is holding back the development of the discipline" (Schmidt, 2018, p.3). The consortium was eventually approved and the workflow tool, which is being developed in Task Area 3 "Analytics and Experiments", is intended to provide some of that technical support and infrastructure. The tool is intended to allow users (experimental archaeologists) to create a flowchart of their experimental designs visually, and to annotate it. It is meant to be "user-friendly": a tool that is simple to use, where the benefits outweigh any costs (in terms of time and effort spent inputting data), but especially one that meets the needs of prospective users. To help identify some of those needs, we reached out to members of the community and read what we could find on experimental design, specifically looking for existing workflows, protocols, wish-lists, guidelines, criticism of the status quo, etc. Our aim was - and is - to create something that can be used by everyone engaged in experimental archaeology, which has been defined as "the use of scientific experimental methods to answer archaeological questions. This covers a spectrum from knapping a simple flint flake to complex and long-term taphonomic experiments" (Schmidt, 2018, p.1). As stated by Eren and Meltzer (2024, p.8): "Experiments are a basic and vital part of the scientific process, and there is no reason archaeological science cannot benefit from them."

We looked at why we do experimental archaeology: we aim towards "The better understanding of the archaeological record, learning 'prehistoric techniques,' replicating artifacts, or teaching students and the public" (Schmidt, 2018, p.1). There is a wide range of reasons for doing experimental archaeology. For example, "flint knapping experiments serve as one of the major areas for deriving referential knowledge for interpreting stone artifacts. Studies of this sort replicate the production or use of specific artifact types as a way to generate behavioral, or sometimes cognitive, analogues that can be projected to the past" (Lin, 2014, p.22). Similarly, there is a wide range of people doing experimental archaeology: "Experimental archaeology [...] is often done by amateurs, it needs practical skills, and the knowledge of craftsmen, and is again a manual activity" (Schmidt, 2018, p.1). When planning our workflow tool, we recognized that we need to incorporate the needs of both professionals and amateurs because both are essential to conducting experiments. Overall, we want to better integrate that practical, first-hand knowledge, not just to benefit those engaged in experimental archaeology, but to benefit the discipline of archaeology as a whole (Schmidt, 2018, p.2).

There are many structural reasons for the current lack of integration, the first being the opposition between professionals and amateurs. In English, the word amateur has slightly negative associations, perhaps due to its opposition to the word professional, but its Latin root - amātor - refers to love. Other reasons include the divisions between classical and prehistoric or field and theoretical archaeology, and a landscape divided between university departments, state services, private excavation companies, museums, hobby archaeologists, etc. One of our aims is to generate some synergy by bringing these groups together in order to create a critical mass which might help identify and respond to needs, such as the lack of clearly defined best practices or of professional standards of the kind that organizations like EXAR and EXARC have been promoting. The newly created Community Cluster "Experimental Archaeology" within the NFDI4Objects consortium (Calandra and Greiff, 2024) should also foster these discussions.

Criticism of experimental archaeology

We found that "Present-day criticism of experimental archaeology is mainly directed at badly defined research questions, lack of practical experience and technical skills on the part of the experimenters, and poorly prepared and poorly conducted experiments" (Schmidt, 2018, p.3). At the very least, "one must clearly state the reason for doing an experiment. What will be learned or achieved? Why is this important? Too often, the purpose of an experiment is assumed to be self-evident" and "there must be a clear description of the different aspects of the experiment. Which materials were used? Which procedures were followed? How and why did these vary from trial to trial?" (Mathieu, 2005, p.110). Our aim is to make it easier to record - to describe - these different aspects, in a standardized fashion, in ways which will make replication easier. Our focus is on the planning and recording of experiments (original design, variations when performing the experiment). Among other things, "The experiment has to be designed in a way that does not advantage any of the possible outcomes", and "observation and interpretation have to be kept separate" (Schmidt, 2018, p.3). Recognized standards for methodology and reporting should help experimental archaeologists gain the respect of their institutional colleagues, and can be improved, partly through education, but also through communication: sharing. Studies of the history and/or philosophy of science often overlook the role dissemination of results plays in the scientific process. French (2007), for example, focuses on observation, and seems to miss the need for dissemination, despite the fact that science is cumulative: we are adding pieces to a giant mosaic. It is possible to observe something unique, but it is not "science" if it is not recorded somehow, and if others are not informed; others must then be convinced, not only of what was observed, but also of the interpretations. 
In this sense, experiments are not performed in isolation. We already have journals and conferences where people can report their results; our workflow tool should also make it easier to share ideas and plans before performing potentially expensive (in terms of time and resources, if not money; e.g. Lin, Rezek and Dibble, 2018, p.676) experiments, so people with relevant experience can provide constructive criticism and tips that will improve the experiments and increase their chances of success. This will in turn help analyze afterwards what worked or what went wrong. Given our limited resources, we want to avoid repeating mistakes or reinventing the wheel. What might be lacking, though, is a degree of trust. There is always the fear that someone will steal my ideas, and publish them before I do, or before I get a chance to present my results at next year's conference, but in archaeology,

"we have to have a kind of integrity most fields don't need. I need your data, and you need mine, and we have to be able to trust each other on some basic level. There can't be any backstabbing, or working in total isolation, or any of this sitting on a rock in the forest interpreting culture in ways no colleague can duplicate"

(Flannery, 1982, p.277).

Sharing

By publishing and sharing, we increase the chance of success and of having something really worth presenting. Simply publishing results is not useful, however, if we cannot find the information we want (or need), and despite the fact that, in any report, "there should be a discussion (or at the very least a good bibliography) of similar experiments" (Mathieu, 2005, p.110), those who perform experiments recognize that "previous findings have been either insufficiently published for their needs, inaccessible, or scattered" (Tichý, 2005, pp.116-117). Since none of us has time to search for all potentially relevant publications, let's at least try to make that task easier. EXARC compiled a bibliography, but it is incomplete and requires updating. It is intended that the workflow tool will link to the open-source software Zotero in order to build a bibliography, which can be linked to documents. Then there is the added problem that, "Unfortunately, 'failed' experiments, that is, experiments that rejected rather than supported a given theory, are rarely published, although they may have produced a good deal of information which might have helped to inform future research" (Schmidt, 2018, p.3). The lack of publication of negative results is a common problem in science (e.g. Thornton and Lee, 2000, p.208), and many of us probably have a biased view of science because its history is so focused on those successes that are published, and overlooks those failures which are not. After all, "One can with an experimental approach far more reliably reject a claim than prove one, dependent of course on the specific conditions tested" (Eren and Meltzer, 2024, p.8). This is knowledge - experience - lost, possibly after investing a lot of time, effort, and perhaps costly materials. Let's acknowledge its value!

A reflexive process

Learning from our mistakes should encourage us to be more reflexive - more critical - of the entire experimental process, not just the decisions made at each step along the way. As Eren and Meltzer (2024, p.7) state,

"the exploration of an experiment's limitations should become regular practice in published archaeological experiments. In some cases, this discussion might take the form of an explicit and separate "limitations section" (or sub-section). Beyond simply pointing out an experiment's drawbacks, this section could also discuss future potential experiments, alternative experimental conditions, and untested variables and variable interactions. All of this would emphasize the fact that no experiment is perfect, comprehensive, or final."

When planning and running an experiment, it is also important to record and explain decisions. Our workflow tool is designed to plan and record this reflexive process, not just observations.

Workflow tool

At its core, the tool is designed to create an interactive flowchart. It is intended to encourage clear, logical thinking when designing (planning) experiments, but also to be playful: to encourage users to be creative, to consider their options when making decisions. The experimental flowchart also gets annotated (experimental practitioner, type of experiment, instrument settings, etc., see below), which is important for sharing.

Objectives

Such a tool is intended to help experimenters design and plan their experiments. It is important that experimenters do this before actually running the experiments in order to avoid misrun experiments. Such experimental designs can even be reviewed before performing the actual experiments as so-called registered reports (e.g. PCI Registered Reports). As every experimenter will know, experiments rarely, if ever, run according to plan. The tool should therefore be able to accommodate unforeseen issues and adjust the plan accordingly, all the while keeping track of what has changed between the initial plan and the experiment as actually run. As mentioned before, we believe that users should reflect on their experimental design. These experimental designs and plans should be savable / exportable in formats that can easily be shared, in order to report on the experiments and on the data produced. Last but not least, user-friendliness is a must, so that experimenters of all backgrounds and levels of IT skill will be able to use it without intensive training.

Features

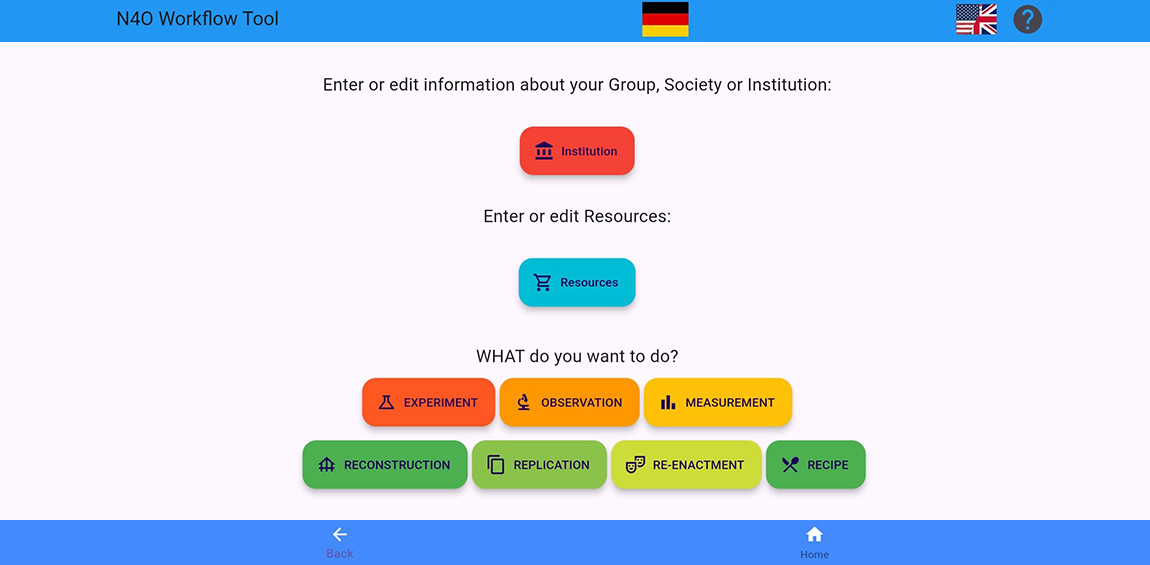

The following features are those that are planned at the moment (See Figure 1). During development, and even more so with users' feedback, adjustments to these features and other features might become important or necessary.

- The flowchart is conceived as a visual tool, i.e. building blocks are positioned and connected to each other by dragging and dropping into the flowchart. Such entities are interconnected with lines. This feature should make the creation of the flowchart intuitive to most users.

- All blocks and connectors can be commented on in order to explain the rationale behind applying this specific action or using this particular tool or instrument setting.

- The flowchart accommodates non-linear workflows. Often, some steps are repeated several times, so loops are necessary. Branching and merging are useful when e.g. a part of the workflow is applied differently to different sets of samples/objects. Dead-ends (e.g. instrument broken so a new instrument was chosen or the production of experimental objects turned out to be impossible) and potential directions for future experiments are other examples of branching.

- Versioning and version control are critical to keep track of the evolution of the experimental design throughout the experiment. A new version of the design should be created every time the design must be adjusted.

- There are numerous other software packages with many interesting functions. Instead of re-creating these functions in the workflow tool, it is much more sustainable to be able to connect to other software packages via so-called Application Programming Interfaces (API). For example, the workflow tool could connect to an Electronic Lab Notebook (ELN) to access the instruments available in a given institute or the data/metadata acquired during the experiments, or interact with other research software frameworks using their APIs written in, e.g., Python or JavaScript to expand its possibilities.

- Data should also be transferrable to the workflow tool, via APIs or via file import. For example, loading terminologies like controlled vocabularies, thesauri or ontologies could support the creation of the workflow by automatically pre-filling the blocks and their connections (Piotrowski, et al., 2014; Thiery and Engel, 2016). Developing such controlled vocabularies for archaeological experiments could be one of the tasks that the NFDI4Objects Community Cluster "Experimental Archaeology" will consider.

- The workflow tool must of course be open-source and should work on the most common platforms (desktop/web, smartphone/tablet) and operating systems (Windows, macOS, iOS, Android).

- Internet access is not always available during experiments, especially for field experiments. The tool should therefore be able to function offline, and then synchronize the data when back online. Authentication is a feature necessary to make sure that only allowed users can access the data.
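Several of the features listed above (commented blocks and connectors, loops, branches, dead-ends and versioning) can be illustrated with a small directed graph. The following is only a minimal sketch under our own assumptions: the `Flowchart` class, its method names and the flint-knapping example are hypothetical and are not taken from the tool's source code.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class Flowchart:
    """Hypothetical in-memory model, not the tool's actual data model."""
    blocks: dict = field(default_factory=dict)    # block id -> comment (rationale)
    links: set = field(default_factory=set)       # directed connectors (source, target)
    versions: list = field(default_factory=list)  # saved snapshots of (blocks, links)

    def add_block(self, block_id: str, comment: str = "") -> None:
        self.blocks[block_id] = comment

    def connect(self, source: str, target: str) -> None:
        if source not in self.blocks or target not in self.blocks:
            raise KeyError("both blocks must exist before they are connected")
        self.links.add((source, target))

    def next_blocks(self, block_id: str) -> list:
        """Blocks reachable in one step; more than one means a branch."""
        return sorted(t for s, t in self.links if s == block_id)

    def save_version(self) -> int:
        """Store a snapshot of the current design and return its version number."""
        self.versions.append((copy.deepcopy(self.blocks), set(self.links)))
        return len(self.versions)


chart = Flowchart()
for name in ("select flint", "knap flake", "inspect edge", "record use-wear"):
    chart.add_block(name)
chart.connect("select flint", "knap flake")
chart.connect("knap flake", "inspect edge")
chart.connect("inspect edge", "knap flake")       # loop: repeat the step
chart.connect("inspect edge", "record use-wear")  # branch: carry on
first = chart.save_version()

# The design is adjusted during the experiment, so a new version is saved.
chart.add_block("discard flake", comment="Dead end: flake broke during knapping.")
chart.connect("inspect edge", "discard flake")
second = chart.save_version()
```

Because each snapshot is a copy, the first version still shows the design as originally planned, while the second records the dead-end that was added later.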

Current state of the user interface

The user of our workflow tool will work through a series of pages (See Figure 2) of a type that should be familiar to anyone who has ever used a smartphone or the internet. Most of the pages are devoted to populating the flowchart menu with an inventory of building blocks that can be combined into an experimental process (workflow). Filling up the inventory could, admittedly, be tedious, but should only need to be done once; importing such a list (from a file or through an API, see above) could speed up the process. In the future, speech-to-text conversion using AI and NLP techniques could also be implemented (e.g. Tupayachi, et al., 2024; Macioł, et al., 2025). Entries can be revised by adding details later. At present the input forms mostly consist of simple text-entry fields, but some of these will be switched to radio buttons or check boxes, drop-down menus, etc., as needed (and to make the interface more inviting). The priority right now is identifying which fields need to be included, but users should eventually be able to enter their own fields using a drop-down menu.

In the text below, terms used in the workflow tool are quoted on first occurrence.

Persons and institutions

The first time the workflow tool is used, the user will be asked if they are an individual "person" or part of some kind of "institution". If the latter, relevant information can then be entered to identify the institution before entering the names of people available to perform specific tasks; otherwise, users will just identify themselves and move on (subsequent use of the tool will only ask if the user needs to edit any information already entered).

Activities

Individuals and institutions will identify the types of activities they are likely to engage in: these currently include formal experiments, observations (routine work with microscopes, chemical analysis, etc.), measurements, re-enactment, replication or reconstruction (See Figure 2). The experimental-design/hypothesis-development process differs for the different types of activities. Standard, everyday observations, measurements, replication, etc., can skip the hypothesis-building stage if the aim is simply to observe or record a specimen. A related hypothesis - is procedure X more effective than procedure Y? - would be classed as an experiment.

"Observations" and "measurements" are seen as routine activities, and the general aim is to create a protocol or standard operating procedure that others can replicate, rather than to test some specific hypothesis. Thus, for example, someone will look at and document use-wear under a microscope or measure the relative proportions of different minerals in a clay or rock. The main difference between the two processes reflects the instruments involved: a microscope observes and a spectrometer measures, but since these distinctions are somewhat arbitrary, the two processes may eventually be combined.

"Reconstruction" is intended to refer to structures (e.g. buildings, earthworks), and "replication" to artifacts (e.g. weapons, clothing, pots, vehicles). Either of these activities might be intended to produce "compounds" for use in "experiments" or "observations": using replica tools to build a longhouse which might then be monitored to see how a specific roof design performs under different weather conditions, or occupied as part of a "re-enactment". Or the construction process itself might be observed, to see e.g. how much time and labour might be involved. At present, the main distinction is one of location: "structures" can be located on a map, while "replicas" will be portable. In some cases, replication might be treated as an experiment: can this artifact be replicated using this method?

The re-enactment activity still needs to be developed. Since it might involve large numbers of persons, either as individuals or as members of various institutions, or individuals acting alone, the "personnel" aspect of the inventory will have to be developed accordingly. Re-enactment might overlap with reconstruction or be part of experimental activities.

Experiments are more complicated than the other types of processes modelled in this app. They require a hypothesis, should include a null hypothesis and some discussion about what results might be expected, how the experimental process tests the hypothesis, how the different results might be interpreted, etc., while replication, reconstruction and re-enactment will have a greater need for references (documentary sources).

Each type of experiment can be linked to references, either to published experimental protocols that the user wishes to replicate or follow, or to artifacts in museums that someone wishes to replicate, whether for the sake of replication, for use in use-wear experiments, to authenticate re-enactment activities, etc. The easiest solution would probably involve a link to some Zotero archive for published works, and to online museum collections for artifacts. The experimental design protocol will in turn be a "resource" for subsequent work. Each experimental design includes the possibility to answer questions like: "What other experiments have been undertaken? What problems did they face? What results did they achieve? Why is this new experiment different and/or necessary?" (Mathieu, 2005, pp.1-2). After that, the process will be broken up into stages: specimen preparation, the experiment itself, recording observations, and performing something like analysis or reaching conclusions (which could just be a matter of saving data).

Resources

Since programming began, distinctions have been made between "instruments" and "tools", and between "compounds" and "substances" (See Figure 3). Instruments and tools are the basic hardware resources available for performing any of the experiments. An experiment in this context means a process of using some combination of instruments and/or tools to do something to some combination of compounds and/or substances.

Tools are simple things like hammers and shovels, whereas instruments will need some selection of details to be recorded, e.g. settings, manufacturer, model and/or serial number. Instruments might also have any number of possible settings which affect performance: a microscope will have magnification and illumination settings, for example, and during the course of an experiment, the user will have to choose which of these to use, even if specific choices are not made while the experiment is being designed. Or a kiln might be capable of operating over a range of temperatures, but the experiment itself is only intended to see how a given type of clay reacts when fired at a specific temperature.
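The distinction between tools and instruments could be modelled roughly as follows. This is a minimal sketch under our own assumptions: the class names, field names and the magnification values are hypothetical illustrations, not the tool's actual input fields.

```python
from dataclasses import dataclass, field


@dataclass
class Tool:
    name: str            # e.g. "hammer"
    tool_type: str = ""  # e.g. "sledge"


@dataclass
class Instrument(Tool):
    manufacturer: str = ""
    model: str = ""
    serial_number: str = ""
    # Setting name -> values the instrument offers (hypothetical examples below).
    available_settings: dict = field(default_factory=dict)

    def choose(self, setting: str, value: str) -> tuple:
        """Return a (setting, value) choice after checking that it is offered."""
        if value not in self.available_settings.get(setting, []):
            raise ValueError(f"{self.name} does not offer {setting}={value}")
        return setting, value


hammer = Tool(name="hammer", tool_type="sledge")
microscope = Instrument(
    name="microscope",
    available_settings={"magnification": ["10x", "20x", "50x"]},
)
# The range of settings is recorded once in the inventory; the specific
# choice is made later, while the experiment is designed or run.
choice = microscope.choose("magnification", "20x")
```

Separating the settings an instrument offers from the setting actually chosen mirrors the point made above: the inventory describes what is possible, while each experiment records what was used.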

Tools can also be made - or replicated - for the purpose of running an experiment: bone retouchers made for flaking lithics, flint projectile points made to test cutting efficiency or use-wear, etc. Once made - or replicated - they are added to the inventory of available tools. Similarly, the process for preparing wood or bronze for use in reconstructions or experiments can be recorded, and the results added to the inventory of available resources.

Examples of tool "names" include hammer, saw, drill, etc., and "types" of hammers would include sledge, carpenter's, etc. Lists of all the possible types of tools are available in the Linked Open Data Cloud, e.g. Wikidata (https://www.wikidata.org; Schmidt, Thiery and Trognitz, 2022) and Getty AAT, in the NFDI Terminology Service via the TS4NFDI Base4NFDI project and DANTE, and in the NFDI Knowledge Graph Infrastructure via KGI4NFDI and the N4O KGI. These lists could be imported (see above), but users will only need to enter the tools that are actually available to them.

Substances are currently used as generic high-level terms like water, plaster and nails (further specification as sub-terms, like water with specific pH values, can be added in future), as opposed to compounds like wood or a specific type of flint or bronze, which might need to be expanded to include things like batch number, brand name or quality, as necessary. Arguably, even water and nails are too generic and the distinction between substances and compounds might not be meaningful.

Fields for entering dimensions will be added. A mapping function will allow the user to locate sources of e.g. lithic materials, wood, soil and clay, and where an observation was made, where a re-enactment took place or where a structure was reconstructed.

Photos can be attached to the resources, either directly in the workflow tool, or in external databases imported into the workflow tool.

Tasks

The "tasks" page provides a to-do list which lets the user build a rough experimental sequence (See Figure 4). An alternative approach would allow actions (i.e. verbs) to be entered and grouped according to activity areas (carpentry, metal-working, etc.). Ideally, some activities will be associated with specific instruments and tools (e.g. hammering is associated with hammers and nails), but such an ontology will be something to consider when the project is developed further, together with the development of an ontology for data exchange in close collaboration with the Community Cluster "Experimental Archaeology" (see above).

Flowchart

Once populated with resources, the tool is intended to be easy to use. Experiments will be designed using a flowchart (See Figure 5). When accessing the flowchart screen, a database query will populate a menu of shapes representing relevant choices (i.e. available resources and associated actions) that the user can select and drag onto the screen to create a workflow. The process will then be structured grammatically: an instrument or tool will be used to perform some action (i.e. task) on some compound or substance. The user can specify which person will perform the task (if there is a choice; experience levels might help indicate which person is most suited to performing a given task), make comments about potential problems or expected interim results, which instrument settings might be most suitable, etc. In terms of design, the flowchart follows the noun-verb-noun structure, represented vertically with an optional link to the person doing any given action (if one person does all the work from beginning to end, adding extra personnel seems pointless) projecting from one side, and the relevant settings for given instruments on the other. A side-link could be added to decisions - traditionally represented as diamonds - identifying which "reference" may have been used as an authority, guidance or inspiration when choosing one tool or method over another, why any number of alternatives were rejected, etc. Recording - and reflexively directing more attention towards - the decision-making process was always one of the main goals when designing the workflow tool. The flowchart allows looping, as when certain actions are repeated any number of times: using a bronze axe to chop a tree a given number of times in order to gauge its effectiveness, for example.

The different types of entities (instruments/tools, compounds/substances etc.) can be color-coded (to be consistent with the framing in the input interfaces). As currently envisioned, there will be no arrows in the vertical stack; the shapes will simply link to each other. Also, there will be a rule enforcing the noun-verb-noun structure (although this could simply be accomplished by switching the selection menus after a choice is made). Different versions of the flowchart can be saved (versioning) as the process is developed, as a means for recording the planning process (someone might want to see where something went wrong). A copy of the final plan can be used to document the actual experiment, record when a specific action was performed, decisions made about specific settings on instruments, comments regarding how things are progressing, photos of instrument set-ups or environmental context (whether it rained on the day someone was supposed to burn charcoal or plaster a wall, for example). The photography package included in the mobile/tablet app also enables photo, video and audio recording of processes.
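The rule enforcing the noun-verb-noun structure, and the alternative of switching the selection menus after each choice, might look something like the sketch below. The category names follow the terms used in the text, but the function names and the representation of the stack as a list of category labels are our own assumptions.

```python
# Hypothetical category labels, following the terms used in the text.
NOUN_SOURCES = {"instrument", "tool"}
VERBS = {"task"}
NOUN_TARGETS = {"compound", "substance"}

# One step of the vertical stack: noun (what is used), verb, noun (what is acted on).
STEP_PATTERN = [NOUN_SOURCES, VERBS, NOUN_TARGETS]


def allowed_next(stack: list) -> set:
    """Categories the selection menu should offer after the current stack."""
    return STEP_PATTERN[len(stack) % len(STEP_PATTERN)]


def is_valid(stack: list) -> bool:
    """True if every entry fits its slot and the stack ends on a complete step."""
    if not stack or len(stack) % len(STEP_PATTERN) != 0:
        return False
    return all(
        category in STEP_PATTERN[i % len(STEP_PATTERN)]
        for i, category in enumerate(stack)
    )
```

For example, `allowed_next(["tool"])` offers only tasks, so a user who has just picked a tool cannot place a compound directly beneath it; `is_valid(["tool", "task", "compound"])` accepts the completed step.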

Once the flowchart has been completed and submitted, a statement should be made about how the experiment, as designed, achieves its goals (i.e. tests the hypothesis). A copy of the completed flowchart can be used as a template while the experiment is being run; it should be possible to amend or tag it, to record the time actions were performed, or show how the actual experiment diverged from the plan. After running an experiment, there will be some kind of post-experiment analysis: did the experiment achieve its aims? Was the hypothesis proven or disproven? What could or should be done differently next time? What further questions were raised by the results? Publication should include a discussion comparing and contrasting results. Since the results "need to be published in a correct and comprehensible fashion" (Schmidt, 2018, p.3), the final output could come in the form of a report.
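Showing how the actual experiment diverged from the plan amounts to comparing two sequences of steps. A minimal sketch using Python's standard `difflib` module is given below; the pottery-firing steps are invented for illustration, and representing each step as a plain string is a simplifying assumption.

```python
from difflib import SequenceMatcher

# Hypothetical example data: the plan and the experiment as actually run.
planned = ["dig clay", "shape pot", "dry pot", "fire at 800 C"]
as_run = ["dig clay", "shape pot", "dry pot", "repair crack", "fire at 750 C"]


def divergences(plan: list, actual: list) -> list:
    """List (change type, planned steps, actual steps) wherever the two differ."""
    matcher = SequenceMatcher(a=plan, b=actual, autojunk=False)
    return [
        (tag, plan[i1:i2], actual[j1:j2])
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]


for change, before, after in divergences(planned, as_run):
    print(change, before, "->", after)
```

Each reported divergence is a natural place to attach a comment explaining why the plan was changed, which supports the post-experiment analysis described above.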

Sharing

From the beginning, the goal was that users could decide for themselves whether to share their workflows. Sharing would offer several advantages, including easy access to comparable workflows, terminologies (e.g. thesauri or ontologies) and long-term storage, but would require the development of things like standardized terminologies (to make searches easier and results compatible). Theoretically, we could create a list of every kind of object in the universe and every type of activity (or import the relevant thesauri produced elsewhere), but we will limit ourselves to activities relevant to experimental archaeology, for example flint knapping, examining an artifact under a microscope, building longhouses or measuring isotope ratios.

It is expected, though, that users might at first be reluctant to share their data (e.g. Marwick and Pilaar Birch, 2018), and that these advantages would have to be made evident; that prospective users would be more likely to use the tool if they feel they have some control over - and can decide for themselves - what will happen with the results; that a critical mass will eventually evolve, and that those who were at first reluctant would in time try it out and see the advantages of joining the network. All the annotations (metadata) added to the experimental flowchart (experimenter, type of experiment, instrument settings, etc.) are important when sharing: this information makes it possible to e.g. query a database of experiments.
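To illustrate how such annotations make shared experiments searchable while leaving the decision to share with the user, consider the following sketch. The record fields, the `shared` flag and the `query` function are hypothetical; a real implementation would query a database rather than a list in memory.

```python
# Hypothetical annotated records; "shared" reflects each user's own choice.
experiments = [
    {"id": 1, "activity": "experiment", "instrument": "kiln", "shared": True},
    {"id": 2, "activity": "observation", "instrument": "microscope", "shared": True},
    {"id": 3, "activity": "experiment", "instrument": "kiln", "shared": False},
]


def query(records: list, **criteria) -> list:
    """Return shared records whose annotations match every criterion."""
    return [
        record
        for record in records
        if record.get("shared")
        and all(record.get(key) == value for key, value in criteria.items())
    ]


kiln_experiments = query(experiments, activity="experiment", instrument="kiln")
```

Here only the first record is returned: the third matches the search terms but has not been shared, so it stays private.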

Conclusions

Largely through community involvement, we are trying to avoid a bureaucratic, centralised, top-down approach, which does not seem to be suited to the experimental archaeology community or to the general lack of long-term financing in archaeology. Our first step was to give interested parties an idea of where the tool is going, and to encourage input regarding which fields need to be included for each type of entity (experimental design [process], resources [nouns], actions [verbs]). After various presentations (Calandra, et al., 2023; Carver and Calandra, 2023; Carver, et al., 2023), a workable prototype was developed in order to give prospective users the opportunity to try the tool for themselves. A webpage was also set up to keep the public informed and to serve as a demo tool (https://tools.leiza.de/workflowtool/).

This tool is a work in progress; much has changed while this paper was being written. The source code for a working version of this tool is available online for downloading, revising, improving, etc. at https://github.com/nfdi4objects/.... The interface is currently in English, but an option for switching to German is being developed.

Acknowledgements

This work has been funded by the German Research Foundation (DFG) via the NFDI4Objects consortium (Grant number NFDI 44/1).

This article is based on programming work and an earlier draft by Geoff Carver (Department of Scientific IT, Digital Platforms and Tools, Leibniz-Zentrum für Archäologie, Neuwied, Germany). We thank Jan Sessing (coordinator NFDI4Objects-TA3, Deutsches Bergbau-Museum, Bochum, Germany) for his support with this project.

A German version of this article will be published in the Düppel Journal in 2025.

Country

- Germany

Bibliography

Bibby, D., Bruhn, K.-C., Busch, A.W., Dührkohp, F., Eckmann, C., Haak, C., Höke, B., Keller, C., Lang, M., von Rummel, P., Renz, M., Senst, H., Stöllner, T., Ulrich, H., Weisser, B. and Wintergrün, D., 2023. NFDI4Objects - Proposal. Available at: < https://doi.org/10/g8ztx2 >.

Calandra, I., Carver, G., Marreiros, J., Hanning, E., Paardekooper, R., Berthold, C. and Greiff, S., 2023. NFDI4Objects - TRAIL3.3: A Workflow Tool for archaeological Experiments and Analytics. 13th Experimental Archaeology Conference, Toruń, Poland, 2 May. Available at: < https://youtu.be/cxuXze7Q_Ig > [Accessed: 20 September 2024].

Calandra, I. and Greiff, S., 2024. NFDI4Objects: Community Cluster - Experimental Archaeology. Available at: < https://doi.org/10/g8ztxz >.

Carver, G. and Calandra, I., 2023. NFDI4Objects - TRAIL3.3: A workflow tool for archaeological experiments and analytics. 20th EXAR Conference, Lorsch, Germany.

Carver, G., Calandra, I., Marreiros, J., Mees, A., Heinz, G. and Florian, T., 2023. TRAIL3.3: Workflow Tool for Archaeological Experiments and Analytics. 14 November. Available at: < https://doi.org/10.5281/zenodo.10122317 >.

Eren, M.I. and Meltzer, D.J., 2024. Controls, conceits, and aiming for robust inferences in experimental archaeology. Journal of Archaeological Science: Reports, 53, p.104411. Available at: < https://doi.org/10/gtwnqh >.

Flannery, K.V., 1982. The Golden Marshalltown: A Parable for the Archeology of the 1980s. American Anthropologist, 84(2), pp.265-278. Available at: < https://doi.org/10/bsgnfk >.

French, S., 2007. Science: key concepts in philosophy. London, New York: Continuum (Key concepts in philosophy).

Lin, S.C., Rezek, Z. and Dibble, H.L., 2018. Experimental Design and Experimental Inference in Stone Artifact Archaeology. Journal of Archaeological Method and Theory, 25(3), pp.663-688. Available at: < https://doi.org/10/grx5xn >.

Lin, S.C.-H., 2014. Experimentation and Scientific Inference Building in the Study of Hominin Behavior through Stone Artifact Archaeology. University of Pennsylvania. Available at: < https://repository.upenn.edu/handle/... > [Accessed: 20 September 2024].

Macioł, A., Macioł, P., Gumienny, G. and Wrzała, K., 2025. A new ontology-based approach to automatic information extraction from speech for production disturbance management. The International Journal of Advanced Manufacturing Technology, 136(7), pp.3735-3752. Available at: < https://doi.org/10.1007/s00170-025-15000-4 >.

Marwick, B. and Pilaar Birch, S.E., 2018. A Standard for the Scholarly Citation of Archaeological Data as an Incentive to Data Sharing. Advances in Archaeological Practice, 6(2), pp.125-143. Available at: < https://doi.org/10/gf5vpk >.

Mathieu, J.R., 2005. For the Reader's Sake: Publishing Experimental Archaeology. EuroREA, 2/2005, p.110.

Piotrowski, M., Colavizza, G., Thiery, F. and Bruhn, K.-C., 2014. The Labeling System: A New Approach to Overcome the Vocabulary Bottleneck. In: P. Schmitz, L. Pearce and Q. Dombrowski, eds. DH-CASE II: Collaborative Annotations on Shared Environments: metadata, tools and techniques in the Digital Humanities. New York, NY, USA: Association for Computing Machinery (DH-CASE '14), pp.1-6. Available at: < https://doi.org/10/gg9w6z >.

Schmidt, M., 2018. Experiment in Archaeology. In: S.L. López Varela, G. Artioli, G. Campbell, C.D. Dore, G. Fairclough, K.K. Hoffmeister, I. Kakoulli, J.M. Parés, L.A. Barba Pingarrón, J. Thomas, E.C. Wells and L.E. Wright, eds. The Encyclopedia of Archaeological Sciences. John Wiley & Sons, Ltd, pp.1-5. Available at: < https://doi.org/10.1002/... >.

Schmidt, S.C., Thiery, F. and Trognitz, M., 2022. Practices of Linked Open Data in Archaeology and Their Realisation in Wikidata. Digital, 2(3), pp.333-364. Available at: < https://doi.org/10.3390/... >.

Thiery, F. and Engel, T., 2016. The Labeling System: A Bottom-up Approach for Enriched Vocabularies in the Humanities. In: CAA2015. Keep The Revolution Going, pp.259-268.

Thiery, F., Mees, A.W., Weisser, B., Schäfer, F.F., Baars, S., Nolte, S., Senst, H. and von Rummel, P., 2023. Object-Related Research Data Workflows Within NFDI4Objects and Beyond. In: Proceedings of the Conference on Research Data Infrastructure, 1. Available at: < https://doi.org/10/g8ztx3 >.

Thornton, A. and Lee, P., 2000. Publication bias in meta-analysis: its causes and consequences. Journal of Clinical Epidemiology, 53(2), pp.207-216. Available at: < https://doi.org/10/dz6vxq >.

Tichý, R., 2005. Presentation of Archaeology and Archaeological Experiment. EuroREA, 2/2005, pp.113-119.

Tupayachi, J., Xu, H., Omitaomu, O.A., Camur, M.C., Sharmin, A. and Li, X., 2024. Towards Next-Generation Urban Decision Support Systems through AI-Powered Construction of Scientific Ontology Using Large Language Models - A Case in Optimizing Intermodal Freight Transportation. Smart Cities, 7(5), pp.2392-2421. Available at: < https://doi.org/10.3390/smartcities7050094 >.